System Failure: 7 Shocking Causes and How to Prevent Them

Ever experienced a sudden crash, blackout, or complete breakdown of a critical service? That’s system failure in action—unpredictable, disruptive, and often costly. In today’s hyper-connected world, understanding why systems fail is not just technical curiosity; it’s essential for survival.

What Is System Failure? A Clear Definition

At its core, a system failure occurs when a system—be it mechanical, digital, organizational, or biological—ceases to perform its intended function. This can range from a minor glitch to a catastrophic collapse. The impact varies, but the root cause often lies in overlooked vulnerabilities.

Defining ‘System’ in Modern Context

The term ‘system’ is broad. It can refer to a computer network, a power grid, a healthcare delivery model, or even a government bureaucracy. A system is any interconnected set of components working toward a common goal. When one part fails, the ripple effect can destabilize the entire structure.

- Technical systems: Software, hardware, networks

- Organizational systems: Business processes, supply chains

- Natural systems: Ecosystems, climate patterns

Understanding the scope helps us anticipate where failure might occur.

Types of System Failure

Not all system failures are created equal. They can be categorized based on severity, origin, and duration:

- Partial failure: Only some components stop working (e.g., a single server in a data center).

- Total failure: Complete shutdown (e.g., a nationwide power outage).

- Latent failure: A hidden flaw that surfaces under stress (e.g., software bug triggered by high traffic).

- Active failure: Immediate and observable (e.g., a hard drive crash).

Recognizing these types helps in diagnosing and responding appropriately.

Why System Failure Matters in the Digital Age

We rely on complex systems more than ever. From online banking to air traffic control, a single point of failure can have global consequences. According to a report by Gartner, the average cost of IT downtime is $5,600 per minute, which adds up to over $300,000 per hour. This makes system failure not just a technical issue, but a financial and reputational risk.
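The hourly figure follows from the per-minute rate by simple multiplication. Here is a minimal sketch of that arithmetic; the function name `downtime_cost` is an illustrative assumption, and the rate is only the average quoted above, so real costs will differ from one organization to another.

```python
# Illustrative arithmetic only: the per-minute rate is the average figure
# quoted in the text, not a measurement for any particular organization.
COST_PER_MINUTE_USD = 5_600


def downtime_cost(minutes: float, cost_per_minute: float = COST_PER_MINUTE_USD) -> float:
    """Return the estimated cost in USD of an outage lasting `minutes`."""
    if minutes < 0:
        raise ValueError("outage duration cannot be negative")
    return minutes * cost_per_minute
```

With these assumptions, `downtime_cost(60)` gives 336000, which is where the "over $300,000 per hour" figure comes from.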

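“Failure is not an option.” The line is closely associated with Gene Kranz, NASA flight director during Apollo 13, although it was written for the 1995 film about the mission and Kranz later adopted it as the title of his memoir. While dramatic, this mindset underscores the need for resilience in critical systems.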

Common Causes of System Failure

Behind every system failure lies a chain of events—often preventable. Identifying common causes is the first step toward building more robust systems.

Hardware Malfunctions

Physical components degrade over time. Hard drives fail, circuits overheat, and power supplies short-circuit. Even with redundancy, hardware remains a leading cause of system failure.

- Wear and tear from continuous operation

- Manufacturing defects

- Environmental factors like heat, humidity, or dust

For example, in June 2012, severe storms caused a power failure at an Amazon Web Services (AWS) data center in Northern Virginia, disrupting major sites such as Netflix, Instagram, and Pinterest. (Source: AWS Status Report)

Software Bugs and Glitches

Code is written by humans—and humans make mistakes. A single line of faulty code can cascade into a full system failure. Software bugs are especially dangerous because they may remain dormant until triggered by specific conditions.

- Memory leaks that consume system resources

- Unhandled exceptions causing crashes

- Concurrency issues in multi-threaded applications

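The 1999 loss of the Mars Climate Orbiter was traced to a unit mismatch: ground software reported thruster impulse in pound-force seconds, while the navigation software expected newton-seconds. The orbiter was part of a program that cost about $327 million in total. (Source: NASA Mars Mission Failure)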
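One common defense against unit mix-ups of this kind is to carry the unit in the type instead of passing bare numbers between components. The sketch below is only an illustration of that idea: the `Impulse` class and the `apply_thruster_correction` function are hypothetical names, not anything taken from NASA software.

```python
from dataclasses import dataclass

# 1 pound-force is defined as exactly 4.4482216152605 newtons.
NEWTONS_PER_LBF = 4.4482216152605


@dataclass(frozen=True)
class Impulse:
    """An impulse stored internally in newton-seconds (SI)."""
    newton_seconds: float

    @classmethod
    def from_newton_seconds(cls, value: float) -> "Impulse":
        return cls(value)

    @classmethod
    def from_pound_force_seconds(cls, value: float) -> "Impulse":
        # Convert at the boundary so downstream code only ever sees SI.
        return cls(value * NEWTONS_PER_LBF)


def apply_thruster_correction(impulse: Impulse) -> float:
    """Hypothetical consumer: rejects bare numbers whose unit is ambiguous."""
    if not isinstance(impulse, Impulse):
        raise TypeError("pass an Impulse, not a bare number with unknown units")
    return impulse.newton_seconds
```

Because the consumer accepts only `Impulse` values, a caller holding a number in imperial units has to state that explicitly through `Impulse.from_pound_force_seconds`, and a bare float fails loudly instead of being silently misread.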

Human Error

One of the most underestimated causes of system failure is human error. Misconfigurations, accidental deletions, and poor decision-making under pressure can bring down even the most advanced systems.

- Incorrect database queries that lock up servers

- Unauthorized access due to weak password policies

- Failure to follow standard operating procedures

IBM’s 2020 Cost of a Data Breach Report attributed 23% of the data breaches it studied to human error, and incidents of this kind can escalate into system outages. (Source: IBM Cost of a Data Breach Report)

System Failure in Critical Infrastructure

When critical infrastructure fails, the consequences can be life-threatening. Power grids, transportation networks, and healthcare systems are prime examples of high-stakes environments where failure is not an option.

Power Grid Failures

Electricity is the lifeblood of modern society. A failure in the power grid can paralyze cities, disrupt communications, and endanger lives.

- Overloaded circuits leading to blackouts

- Cyberattacks targeting control systems

- Natural disasters damaging transmission lines

The 2003 Northeast Blackout affected about 55 million people across the U.S. and Canada. Contributing causes included a software bug that silenced a control-room alarm system, inadequate monitoring, and overgrown trees touching power lines. (Source: NERC Blackout Report)

Transportation System Collapse

From air traffic control to railway signaling, transportation systems depend on flawless coordination. A single failure can lead to delays, accidents, or fatalities.

- Air traffic control system failure causing flight cancellations

- Train signaling errors leading to collisions

- GPS spoofing disrupting navigation

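In January 2023, an outage of the FAA’s NOTAM system, which distributes safety notices to pilots, led to a nationwide ground stop that delayed thousands of U.S. flights. The FAA traced the outage to a damaged database file. (Source: FAA NOTAM System)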

Healthcare System Breakdowns

Hospitals rely on integrated systems for patient records, diagnostics, and life support. A system failure here can literally be a matter of life and death.

- Electronic health record (EHR) system crashes

- Medical device malfunctions due to software errors

- Ransomware attacks locking down hospital networks

In 2017, the WannaCry ransomware attack disrupted services at about 80 NHS hospital trusts in England, and thousands of appointments were canceled. (Source: BBC Coverage of NHS Cyberattack)

Cybersecurity and System Failure

In the digital era, cyber threats are among the most potent causes of system failure. Malicious actors exploit vulnerabilities to disrupt, steal, or destroy.

Ransomware Attacks

Ransomware encrypts critical data and demands payment for its release. These attacks often target organizations with weak security protocols.

- Colonial Pipeline attack in 2021 forced a shutdown of fuel supply across the U.S. East Coast

- Hospitals, schools, and local governments are frequent targets

- Attackers use phishing emails to gain initial access

The Colonial Pipeline incident cost the company about $4.4 million in ransom, part of which U.S. authorities later recovered, and caused widespread panic buying. (Source: CISA on Colonial Pipeline Attack)

Distributed Denial of Service (DDoS) Attacks

DDoS attacks flood a system with traffic, overwhelming its capacity and causing it to crash.

- Used to target websites, online services, and cloud platforms

- Botnets of compromised devices generate massive traffic

- Can be politically or financially motivated

In 2016, the Mirai botnet attacked Dyn, a major DNS provider, making Twitter, Spotify, Reddit, and other sites unreachable for many users. (Source: Dyn DDoS Attack Summary)

Insider Threats

Not all threats come from outside. Employees or contractors with access can intentionally or accidentally cause system failure.

- Disgruntled employees deleting critical data

- Accidental exposure of credentials

- Privilege abuse for unauthorized access

A 2022 report by Cybersecurity Insiders found that 68% of organizations feel vulnerable to insider threats. (Source: Cybersecurity Insiders Report)

Organizational and Management Failures

Sometimes, the system isn’t the problem—the people running it are. Poor leadership, lack of training, and flawed processes can lead to system failure even with perfect technology.

Lack of Redundancy and Backup Plans

Resilience comes from redundancy. Systems without backup components or failover mechanisms are vulnerable to single points of failure.

- No secondary power sources during outages

- Failure to maintain offsite data backups

- Over-reliance on a single vendor or service

After Hurricane Katrina, many New Orleans businesses lost all data because backups were stored locally and destroyed in the flood.
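The benefit of redundancy can be estimated with a standard reliability formula: if any one of n independent copies is enough to keep a service running, the combined availability is 1 - (1 - a)^n, where a is the availability of a single copy. The sketch below implements that formula; the function name is an illustrative assumption, and the independence assumption is exactly what fails when backups share one building or flood zone.

```python
def combined_availability(component_availability: float, copies: int) -> float:
    """Availability of `copies` redundant components, any one of which suffices.

    Assumes failures are statistically independent, which does not hold when
    the copies share a building, a power feed, or a flood zone.
    """
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if copies < 1:
        raise ValueError("need at least one copy")
    return 1.0 - (1.0 - component_availability) ** copies
```

Under these assumptions, two copies that are each 99% available combine to roughly 99.99%. Two backups stored in the same flooded basement behave much more like a single copy, which is why offsite storage matters.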

Poor Communication and Coordination

In complex systems, communication is key. When teams don’t share information, small issues escalate into major failures.

- IT and operations teams working in silos

- Lack of incident response protocols

- Failure to escalate critical warnings

The Challenger space shuttle disaster in 1986 was partly the result of managers overriding engineers’ concerns about the O-rings. (Source: NASA Challenger Disaster Report)

Failure to Adapt to Change

Technology evolves rapidly. Organizations that fail to update their systems or train staff risk obsolescence and failure.

- Using outdated software with known vulnerabilities

- Resisting digital transformation

- Ignoring user feedback and performance metrics

Blockbuster’s refusal to adapt to streaming led to its downfall, while Netflix thrived by embracing change.

Case Studies of Major System Failures

History is filled with cautionary tales of system failure. Studying these cases provides valuable lessons for prevention.

The 2003 Northeast Blackout

This was one of the largest blackouts in history, affecting eight U.S. states and parts of Canada. It began when a software bug in FirstEnergy’s control room kept the alarm system from alerting operators to transmission line overloads.

- Root cause: Inadequate system monitoring and tree contact with power lines

- Impact: 55 million people without power for up to two days

- Lesson: Real-time monitoring and maintenance are critical
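A monitoring system that fails silently is worse than none, so the monitor itself needs monitoring. One simple pattern is a heartbeat watchdog that flags any component that has gone quiet for too long. The sketch below is a minimal illustration; the class name, the component names, and the 30-second threshold are all assumptions rather than details of any real grid control system.

```python
from dataclasses import dataclass, field


@dataclass
class HeartbeatWatchdog:
    """Tracks the last heartbeat per component and reports stale ones."""
    max_silence_seconds: float
    last_seen: dict = field(default_factory=dict)

    def beat(self, component: str, now: float) -> None:
        # Record the time at which the component last reported in.
        self.last_seen[component] = now

    def stale_components(self, now: float) -> list:
        # A component is stale if it has been silent longer than allowed.
        return sorted(
            name for name, seen in self.last_seen.items()
            if now - seen > self.max_silence_seconds
        )


# Hypothetical usage: timestamps are plain seconds passed in by the caller.
watchdog = HeartbeatWatchdog(max_silence_seconds=30)
watchdog.beat("alarm-processor", now=0)
watchdog.beat("state-estimator", now=50)
stale = watchdog.stale_components(now=60)  # only the alarm processor is stale
```

Passing `now` explicitly keeps the logic easy to test; a production version would read a monotonic clock and page an operator when the stale list is not empty.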

The Knight Capital Trading Glitch (2012)

A software deployment error caused Knight Capital to lose $440 million in just 45 minutes. A repurposed configuration flag activated dormant legacy code on a server that had not received the new release, triggering a flood of unintended trades.

- Root cause: Poor software testing and deployment practices

- Impact: Nearly bankrupted the company

- Lesson: Automated systems need rigorous testing and kill switches

The incident prompted SEC enforcement action and closer regulatory scrutiny of automated trading controls. (Source: SEC Report on Knight Capital)
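A kill switch can be as simple as a gate that every order must pass through and that latches shut once a hard limit is crossed. The sketch below illustrates the idea; `OrderGate`, `KillSwitchTripped`, and the exposure limit are hypothetical names and values, not a description of any trading firm’s actual controls.

```python
class KillSwitchTripped(RuntimeError):
    """Raised when trading has been halted by the kill switch."""


class OrderGate:
    """Blocks further orders once cumulative exposure passes a hard limit."""

    def __init__(self, max_exposure_usd: float) -> None:
        self.max_exposure_usd = max_exposure_usd
        self.exposure_usd = 0.0
        self.halted = False

    def submit(self, order_value_usd: float) -> None:
        if self.halted:
            raise KillSwitchTripped("trading halted; manual reset required")
        if self.exposure_usd + order_value_usd > self.max_exposure_usd:
            # Latch the switch: later orders are rejected until a human resets it.
            self.halted = True
            raise KillSwitchTripped("exposure limit exceeded")
        self.exposure_usd += order_value_usd

    def reset(self) -> None:
        """Re-enable trading after a human has reviewed the situation."""
        self.exposure_usd = 0.0
        self.halted = False
```

The key design choice is that the gate stays closed after tripping. An automated system that resumes on its own can repeat the same mistake faster than anyone can react.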

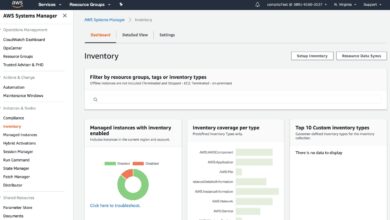

Facebook Outage of 2021

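On October 4, 2021, Facebook, Instagram, and WhatsApp went offline for nearly six hours. According to Facebook’s post-mortem, a command issued during routine maintenance unintentionally took down the company’s backbone network. Its DNS servers then withdrew their Border Gateway Protocol (BGP) route advertisements, which cut Facebook’s services off from the internet.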

- Root cause: Human error during routine maintenance

- Impact: Global communication disruption and an estimated $60 million in lost ad revenue

- Lesson: Even tech giants are vulnerable to simple mistakes

The outage highlighted the fragility of centralized digital platforms. (Source: Facebook Engineering Post-Mortem)

How to Prevent System Failure

While not all failures can be avoided, many can be mitigated through proactive strategies and robust design principles.

Implement Redundancy and Failover Mechanisms

Redundancy ensures that if one component fails, another can take over seamlessly.

- Use redundant servers, power supplies, and network paths

- Design systems with automatic failover capabilities

- Test failover procedures regularly

Data centers often use N+1 or 2N redundancy models to ensure uptime.
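In software, automatic failover often comes down to trying an ordered list of backends and moving to the next one when a call fails. The following is a minimal sketch of that pattern; `call_with_failover` and the two stand-in backends are illustrative assumptions, and a real system would add timeouts, health checks, and logging.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(backends: Sequence[Callable[[], T]]) -> T:
    """Try each backend in order and return the first successful result."""
    errors = []
    for backend in backends:
        try:
            return backend()
        except Exception as exc:  # broad on purpose: any failure triggers failover
            errors.append(exc)
    raise RuntimeError(f"all {len(backends)} backends failed: {errors!r}")


# Hypothetical backends standing in for a primary and a standby server.
def primary() -> str:
    raise ConnectionError("primary is down")


def standby() -> str:
    return "served by standby"


result = call_with_failover([primary, standby])
```

The caller never sees the failure of the primary, which is the point of failover. The same function also makes it easy to rehearse the failure path in tests, in line with the advice to test failover procedures regularly.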

Conduct Regular System Audits and Testing

Proactive testing identifies weaknesses before they cause failure.

- Perform penetration testing for cybersecurity

- Run stress tests to simulate high-load scenarios

- Audit code and configurations for compliance

Regular audits help maintain system integrity and regulatory compliance.

Invest in Employee Training and Culture

People are the first line of defense. Training staff to recognize risks and respond appropriately is crucial.

- Teach best practices for cybersecurity and system management

- Foster a culture of accountability and transparency

- Encourage reporting of near-misses and potential issues

Google’s Site Reliability Engineering (SRE) model emphasizes human factors in system reliability. (Source: Google SRE Principles)

The Future of System Resilience

As systems grow more complex, so must our approaches to preventing failure. Emerging technologies and philosophies are shaping a more resilient future.

AI and Predictive Maintenance

Artificial intelligence can analyze vast amounts of data to predict failures before they happen.

- AI models detect anomalies in system behavior

- Predictive analytics forecast hardware degradation

- Automated alerts allow preemptive action

Companies like Siemens and GE use AI to monitor industrial equipment and reduce downtime.
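Anomaly detection does not have to start with a large model. A rolling z-score over recent sensor readings already captures the basic idea of flagging values that deviate sharply from recent behavior. The sketch below is a simple statistical stand-in, not the method used by any vendor named above; the class name, window size, and threshold are assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags readings that sit far from the mean of a recent window."""

    def __init__(self, window: int = 20, threshold: float = 3.0,
                 min_samples: int = 5) -> None:
        self.values = deque(maxlen=window)  # oldest readings drop off automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous relative to the recent window."""
        is_anomaly = False
        if len(self.values) >= self.min_samples:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            # Skip the check when the window is perfectly flat (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly


# Hypothetical temperature readings ending with a sudden spike.
detector = RollingAnomalyDetector()
readings = [70.0, 70.4, 69.8, 70.2, 69.9, 70.1, 95.0]
flags = [detector.observe(r) for r in readings]
```

Only the final spike is flagged in this example. Note that a flagged value still enters the window, so a sustained shift will stop being reported after a while; whether that is desirable depends on the equipment being monitored.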

Decentralized Systems and Blockchain

Decentralization reduces single points of failure. Blockchain technology, for example, distributes data across nodes, making it harder to disrupt.

- Eliminates central control points vulnerable to attack

- Enables peer-to-peer resilience

- Applied or piloted in voting, supply chain tracking, and finance

Ethereum and other decentralized platforms aim to create tamper-resistant systems. (See: Ethereum Official Site)
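Tamper resistance in a blockchain rests largely on hash linking: each block stores the hash of the previous one, so changing an old record invalidates every block after it. The sketch below shows only that linking step using Python’s standard `hashlib`. It is a teaching example under simplified assumptions, with no consensus, networking, or signatures, and it does not reflect Ethereum’s actual data structures.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index: int, data: str, previous_hash: str) -> str:
    """Hash a block's contents together with the hash of its predecessor."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def build_chain(records: list) -> list:
    """Link records into a chain where each block commits to the one before."""
    chain = []
    previous_hash = GENESIS_HASH
    for index, data in enumerate(records):
        current = block_hash(index, data, previous_hash)
        chain.append({"index": index, "data": data,
                      "previous_hash": previous_hash, "hash": current})
        previous_hash = current
    return chain


def is_valid(chain: list) -> bool:
    """Recompute every hash and check that each link matches."""
    previous_hash = GENESIS_HASH
    for block in chain:
        expected = block_hash(block["index"], block["data"], block["previous_hash"])
        if block["previous_hash"] != previous_hash or block["hash"] != expected:
            return False
        previous_hash = block["hash"]
    return True
```

Editing the data in any block makes `is_valid` return False unless every later hash is recomputed as well. In a decentralized network, the other nodes holding their own copies are what make that recomputation impractical.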

The Role of Regulation and Standards

Government and industry standards play a vital role in enforcing reliability.

- ISO/IEC 27001 for information security management

- NIST Cybersecurity Framework for critical infrastructure

- GDPR for data protection and accountability

Compliance with these standards reduces the risk of system failure due to negligence.

What is system failure?

System failure occurs when a system—technical, organizational, or biological—stops functioning as intended, leading to disruption, downtime, or loss of service.

What are the most common causes of system failure?

The most common causes include hardware malfunctions, software bugs, human error, cyberattacks, lack of redundancy, and poor management practices.

Can system failure be prevented?

While not all failures can be prevented, many can be mitigated through redundancy, regular testing, employee training, and robust cybersecurity measures.

How did the Facebook 2021 outage happen?

The outage began when a command issued during routine maintenance unintentionally disconnected Facebook’s backbone network. Its DNS servers then withdrew their BGP routes, cutting its services off from the internet.

What is the cost of system failure?

The cost varies by industry, but Gartner estimates IT downtime costs an average of $5,600 per minute, with major outages costing millions in lost revenue and recovery.

System failure is an inevitable risk in any complex environment. However, by understanding its causes—from hardware flaws to human error—and learning from past disasters, we can build more resilient systems. Prevention lies in redundancy, proactive monitoring, strong cybersecurity, and a culture of accountability. As technology evolves, so must our strategies to protect the systems we depend on. The future belongs to those who prepare, not just react.
